Talking Human: The Rise of Natural Language Processing (NLP)

Author's Note: This post was first shared on Radical Ventures' website in August 2020.

It is difficult to overstate the importance of advances in Natural Language Processing (NLP). In 2012, an artificial intelligence (AI) research breakthrough in computer vision known as AlexNet precipitated a new era of AI innovation. It was a watershed moment for AI, but business applications remained limited to vision-related use cases. Advances in NLP, on the other hand, will have far-reaching impacts that cut to the heart of every business: the customer. Any spoken conversation, digital exchange, invoice, or legal contract offers data relevant to better understanding customers. And any future customer touchpoint stands to benefit from tailored language models that can support interactions and derive insights. To be clear, we are in the very early innings of NLP innovation, but investment opportunities are already emerging.

OK Computer

The need to “speak computer” is no longer a barrier to leveraging machines. For the first time in history, we are able to communicate with computers in the same way we speak to each other. Gone are the days of relying on keywords and specific syntax designed to activate an algorithm. We can talk to virtual assistants on our phones, receive curated purchasing advice from chatbots, and let autocomplete features assist with our searches, write our emails, and ask our questions. All of these are examples of NLP in action.

While the roots of computer-assisted language generation date back to the 1950s, the current wave of NLP applications stems from breakthroughs in AI research over the last decade. Earlier this year, the world got a sneak peek at what the next wave of NLP innovation might look like. In May, OpenAI announced GPT-3, a 175-billion-parameter language model. Released as a beta to global headlines, GPT-3’s text generation engine has been used to write short stories, technical manuals, songs, and even computer code. As corporations explore novel use cases, investors are tasked with determining where this technology will find market traction.

Despite a looming peak in the NLP “hype cycle”, we expect NLP innovations to follow a path similar to that of computer vision technologies. While it may be a few years before we see superhuman use cases emerge (it was several years after AlexNet before deep learning algorithms outperformed radiologists at detecting specific pathologies), we believe clear and significant opportunities are emerging in the NLP field today. The release of GPT-3 underlines the potential for NLP technologies to impact multiple industries and serves as a useful benchmark for evaluating NLP as a stand-alone market category.

From a macro perspective, four major trends are driving demand for effective NLP applications:

Digitization of social interactions: the proliferation of online interactions has allowed for the collection of larger datasets and for sentiment analysis;

Accelerated remote interactions with customers: COVID-19 spurred virtual customer engagements through call centres and chatbots, and data from these conversations can now be analyzed;

Increasing global labour costs: continued wage increases push highly manual processes toward automation; and

Data comprehension: as global markets become increasingly competitive, companies need to better leverage their data to offer superior products.

NLP (loosely) defined

NLP sits at the intersection of computer science and linguistics, encompassing both natural language understanding and natural language generation. Simply put, NLP is the study of how computers understand the ways in which we communicate. Within voice technologies, NLP is the middle “thinking” step between speech recognition and speech generation, and it powers many of the voice applications we use every day. Like many other fields of AI, NLP progressed slowly for decades before re-emerging in the 2000s with the application of deep learning, a technology pioneered by Turing Award recipient and Vector Institute Chief Scientific Advisor Geoffrey Hinton. More recently, remarkable advances in model architecture have substantially improved NLP accuracy, unlocking new and exciting use cases in our everyday lives.

Autobots Assemble

Language is one of the most complicated cognitive functions of the human brain, and it poses similar complexity for AI neural networks. NLP networks analyze enormous amounts of linguistic data to parse the morphology of words, the syntax of sentence structure, the semantics of phrases, and the pragmatics of context. Together, these help produce a language model capable of predicting which words and phrases fit a given context. Given this complexity, effective NLP requires significant amounts of data and compute power. The current pace of innovation in NLP can be attributed to the emergence of custom silicon, paired with significant algorithmic breakthroughs.
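To make “predicting which words fit a given context” concrete, here is a minimal sketch of the simplest possible language model: a bigram model that counts which word follows which in a toy corpus. It is a stand-in chosen purely for illustration; modern NLP networks learn these statistics with deep neural networks over far more data and far longer contexts, not with raw counts. The corpus and the function names `train_bigram`, `next_word_probs`, and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # Pad with boundary markers so the first word also has a context.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn raw follow-counts into a probability distribution over next words."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()} if total else {}


def predict_next(counts, prev):
    """Return the most likely next word after `prev`, or None if `prev` is unseen."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None


# Hypothetical toy corpus, for illustration only.
corpus = [
    "the customer wants a refund",
    "the customer wants a new invoice",
    "the contract needs a signature",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))     # customer ≈ 0.67, contract ≈ 0.33
print(predict_next(model, "customer"))   # wants
```

Autocomplete features work on the same principle of ranking likely continuations, although production systems condition on much more than the single previous word.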

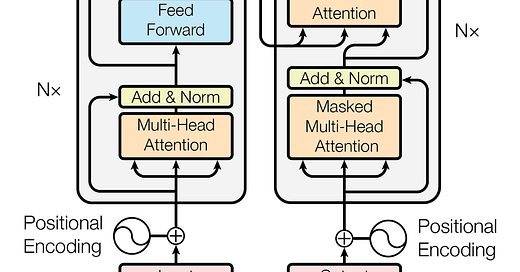

Most transformational, however, is the Transformer architecture (no, not those Transformers), invented by a Google Brain team in June 2017 (one member of which has since co-founded an NLP platform company, Cohere, in which Radical Ventures is the sole founding investor). Transformers drastically improved performance on downstream tasks by leveraging “attention” mechanisms, which assign different levels of importance to different parts of an input sequence of words. This architecture is now standard across NLP solutions and has unlocked many new use cases.
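The core attention computation is surprisingly small. Below is a minimal, dependency-free sketch of scaled dot-product attention as described in “Attention Is All You Need”: each query vector is compared with every key vector, the scores are divided by the square root of the key dimension and passed through a softmax, and the resulting weights mix the value vectors. The toy vectors are hypothetical; production Transformers use learned projections, many attention heads, and optimized tensor libraries rather than Python lists.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors.

    For each query: score every key, scale by sqrt(d_k), softmax the scores
    into attention weights, and return the weighted sum of the value vectors.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-d key/value vectors for a three-word input; real models learn these.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[1.0, 0.0]]
outputs, weights = attention(queries, keys, values)
print([round(w, 3) for w in weights[0]])
print([round(x, 3) for x in outputs[0]])
```

Here the query matches the first and third keys equally well, so those two words receive equal, larger weights and dominate the output, while the second word is largely ignored.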

[Figure: Transformer architecture]

Technological breakthroughs that dramatically improve the accuracy of NLP models correlate closely with the expansion of NLP applications and business adoption. While estimates vary, NLP likely represents a US$10 billion market today, growing at upwards of 30% per year. Much of the market currently resides in North America, with increasing global adoption expected in the near term.
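To show what a 30% annual growth rate implies, the sketch below compounds the roughly US$10 billion estimate forward. This is purely illustrative arithmetic, not a forecast: it assumes the growth rate holds constant every year, which market estimates rarely do, and the function name `project_market_size` is hypothetical.

```python
def project_market_size(start_billions, annual_growth, years):
    """Compound a starting market size at a constant annual growth rate."""
    return start_billions * (1 + annual_growth) ** years


# Inputs taken from the estimate above: about US$10B today, growing ~30% per year.
for years in (1, 3, 5):
    print(years, round(project_market_size(10.0, 0.30, years), 1))
# prints 1 13.0, then 3 22.0, then 5 37.1
```

At a constant 30%, the market would roughly double in under three years and grow about 3.7x in five.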

NLP applications are increasingly replacing analog solutions, streamlining operations, and driving efficiencies. While NLP use cases have found early successes in enterprises by cutting costs, incremental insights driven by NLP will provide opportunities to accelerate existing revenue channels and open new and distinct business lines. Like other areas of AI, we believe that nearly all businesses will eventually leverage NLP solutions in their day-to-day operations. With that in mind, understanding what solutions look like today is critical to getting ahead of how NLP will impact business in the future.

So what? Has this ever made it out of research papers?

Today, most commercial-scale investment in NLP models and algorithms is being driven by Big Tech. Amazon, Google, Microsoft, and IBM all offer API-based NLP solutions designed to comprehend, translate, and analyze natural language. Academia continues to research language models, and countless smaller players remain focused on industry- or function-specific NLP solutions. Given the cost of training the large language models that power these solutions, smaller cloud providers have struggled to keep pace with the bigger players. Meanwhile, larger tech companies continue to acquire talent in the space to fill gaps in their teams and tech stacks.

When it comes to choosing between providers, there are several criteria to consider:

Efficacy: generally measured by both accuracy (how well a model classifies text) and perplexity (how well a model predicts a sample). Which measure matters most is usually determined by the downstream use case, so understanding the business need is critical.

Speed: how quickly can a provider start solving business tasks? This criterion depends on both the initial training speed of the model (start-up time) and the speed/latency of the application in use.

Cost: most current providers (including Big Tech solutions) offer a freemium model that eventually charges by volume (e.g., number of characters processed). Most providers also offer enterprise-level contracts that cap customers at certain volume levels.

Data Security / Privacy: will it be an on-prem solution, or will it leverage a cloud-based API? Where does the data itself reside? Who owns the data being analyzed? This is one area where startups and smaller providers have an advantage over Big Tech, particularly if customers compete with Big Tech providers in other categories.
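Both efficacy metrics are simple to compute once a model's outputs are in hand. The sketch below assumes we already have predicted and true labels (for accuracy) and, for perplexity, the probability the model assigned to each actual next token in a held-out sample. Perplexity is the exponential of the average negative log-probability, so lower is better, and a model that spreads its probability evenly over k options scores exactly k. The sample labels and probabilities are hypothetical.

```python
import math


def accuracy(predicted_labels, true_labels):
    """Fraction of examples the classifier labelled correctly."""
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)


def perplexity(token_probs):
    """Perplexity from the probability assigned to each actual next token.

    PP = exp(-(1/N) * sum(log p_i)). Lower is better; a model assigning
    probability 1/k to every token has perplexity k.
    """
    n = len(token_probs)
    avg_neg_log = -sum(math.log(p) for p in token_probs) / n
    return math.exp(avg_neg_log)


# Hypothetical model outputs on a small held-out sample.
print(accuracy(["pos", "neg", "pos", "neg"], ["pos", "neg", "neg", "neg"]))  # 0.75
print(round(perplexity([0.25, 0.25, 0.25, 0.25]), 3))  # 4.0 (uniform over 4 choices)
print(round(perplexity([0.9, 0.6, 0.8, 0.7]), 3))      # lower: more confident, correct predictions
```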

In addition to the Big Tech players, the big “independent” in the space is OpenAI, an AI research lab founded in 2015 by Elon Musk, Sam Altman, and AlexNet co-creator Ilya Sutskever, among others. While largely independent, OpenAI received a US$1 billion investment from Microsoft in July 2019, which it has used to fund the development of its massive language model, GPT-3. Today, GPT-3 dwarfs other language models on the market, and OpenAI has already begun partnering with companies such as Casetext, MessageBird, and Reddit to pilot the model on problems in areas like semantic search, customer service, and content moderation.

Finally, open source platforms such as Hugging Face provide communities where developers and scientists build natural language models and processing technologies together. Today, Hugging Face has over one million installs from AI researchers, NLP startups, and Big Tech developers, and it offers pre-trained Transformer models to the public. By sparing users the high cost of training these models from scratch, providers like Hugging Face have brought cutting-edge technology out of the lab and into the hands of the public. Going forward, resources like this will keep raising the bar for monetizable models and for competitors in the space.

Who’s Who

While the market for NLP is expanding, it remains constrained by a lack of cutting-edge talent in the field. Finding individuals with both deep learning and Transformer architecture expertise is exceptionally rare. Today, most of this talent resides within Big Tech, a few players like OpenAI, and elite AI research institutions.

In addition to the Bay Area, Canada has emerged as a key hub for AI research talent. Given the access to researchers at the Vector Institute for AI, MILA, the University of Toronto, and a significant number of Big Tech research arms such as Google Brain, Toronto and Montreal have a critical mass of talent in a globally scarce talent pool. Within the NLP universe, qualified, high-end talent is emerging as the key bottleneck to expansion, and Canada’s ecosystems appear well positioned to capitalize on this opportunity in the years to come.

Show me the money


Steps 1-3 are academically interesting and technically challenging; however, significant value creation resides in the ultimate applications of a model. While we expect model processing to be an arms race involving large amounts of computational spend, we believe there are opportunities for startups to compete by specializing in steps 1-2, both horizontally (by application) and vertically (by industry/end-market).

Much of the hype in the space has centred on the enormous growth in the parameter counts of large language models. Such models, however, are increasingly expensive to train and, at a certain point, deliver diminishing returns on performance. In building effective NLP applications, model size is not necessarily the only factor contributing to performance. Once pre-trained models reach sufficient scale, further training them on task-specific datasets (fine-tuning) tends to significantly improve results. Given this nuance, we don’t believe the NLP market has a winner-take-all structure. Rather, there will likely be multiple winners across both horizontal application development and vertical end-market plays.

As we look for opportunities to invest in NLP, we believe there are several key factors that will contribute to strategic exits, and ultimately outsized returns:

Flywheel benefits: models that improve their applications over time by leveraging a large common data model, or vertical providers that develop a large trove of valuable proprietary data;

Players with world-class IP/patent protection; and/or

Traditional strategic advantages (such as brand or exclusive partnerships).

While we expect there to be several big winners in NLP going forward, Radical Ventures has already invested in a world-class NLP team based in Canada.

Betting on Cohere

In September 2019, Radical became the first investor in Toronto-based Cohere. Cohere is developing a model of unstructured language data that aims to power a wide variety of NLP applications. The company is focused on developing proprietary solutions for better data scraping and model training, which would enable it to outperform larger language models in discrete horizontal and vertical subcategories at a fraction of the training cost.

Our investment in Cohere was based on our enthusiasm for the extraordinary team it is building in the space. The company has extensive knowledge of Transformer architecture, making it one of the few teams in the world qualified to solve cutting-edge natural language problems. Specifically, Cohere co-founder and CEO Aidan Gomez co-authored the breakthrough Google Brain paper “Attention Is All You Need,” which introduced the Transformer architecture. Another of the company’s co-founders is Geoffrey Hinton protégé Nick Frosst.

We believe Cohere is uniquely positioned to become a leading platform in the dynamic and fast-growing NLP space. As the adoption of NLP becomes increasingly widespread, and more businesses begin to implement the technology, Cohere has the opportunity to revolutionize the way business is done.

Talking Human

Perhaps it is appropriate that we give the final word on the potential of NLP to the technology itself. Cohere has created an NLP plug-in that generates predictive text based on what you write. When we typed “The Natural Language Processing market has the potential to” into the Cohere API, it suggested the following: “…increase dramatically in the coming years. The ability to create a well-structured data model is a must for any company. This is especially true for NLP.”