The Modern ML Stack is broken, but it won't be for long

Or, what the most important technological breakthrough of our generation can learn from cable television

It seems like in the last few weeks everyone on planet Earth has woken up and realized the AI revolution is here, and we might be about to see the largest wave of business disruption since the rise of the internet. However, what you won’t see behind the slick UI of ChatGPT is the gigantic mess of infrastructure that’s needed to make it tick. While a well-funded organization like OpenAI or a Big Tech company can brute force its way into building by hiring armies of ML engineers and infrastructure engineers, the rest of the global economy cannot. If modern organizations are really going to participate in the AI revolution, someone is going to need to help them build it.

Same same, but different

Most people are familiar with the rise of the “Modern Data Stack”, in which organizations are now able to capture and leverage the proprietary data that they receive from their customers. This was the outcome of the “Big Data” revolution of the 2010s, and powered the emergence of arguably the most successful startup of the decade, Snowflake. As technology reached a point at which businesses could actually capture their data (~2010), data proliferation necessitated a change in the way that we actually worked with it (image from Statista):

Gone were the days of gut-based decision making, and in came the rise of the “data driven” manager. Suddenly business leaders could actually track what did and didn’t work, and access to this data quickly became table stakes. This was (and continues to be) a key secular driver behind the pivot to cloud-based software and the rise of the global cloud providers: all of a sudden everybody wanted more and more data, but there was nowhere to store it.

AI might be having a similar moment today. With the emergence of a “killer app” (ChatGPT), all of a sudden everyone on earth wants access to this same technology. Unfortunately, similar to the pre-data-warehouse era, the infrastructure to build and leverage this technology is limited. AI also needs data, but it needs to use that data differently from what the Modern Data Stack offers. If modern businesses are going to benefit from the AI revolution the same way they benefitted from the Big Data revolution, they’re going to have to be able to leverage their own proprietary data.

Not so fast

Today, most breakthrough AI models are trained on the same dataset: the internet. While this is useful in isolation, the real holy grail of insights for business leaders is using their own proprietary data, either by training and serving their own models or by fine-tuning general purpose ones (foundation models).

That, however, is much easier said than done. Given its roots in academia and research and the speed of innovation in the last five years, the ability to build in AI is far from enterprise grade today. ML development tools from the global cloud providers (e.g., SageMaker) are not core to their business and have largely been an afterthought: it’s hard to build production-grade products with them alone. As such, the first-generation AI companies were forced to build everything they needed in-house (notably autonomous driving companies and the rest of Big Tech). As ML teams in these firms matured, a generation of startups emerged to provide a variety of point solutions so that new companies wouldn’t have to build everything themselves. This brings us to today, where the proliferation of point solutions has produced this (landscape image from our friends at a16z):

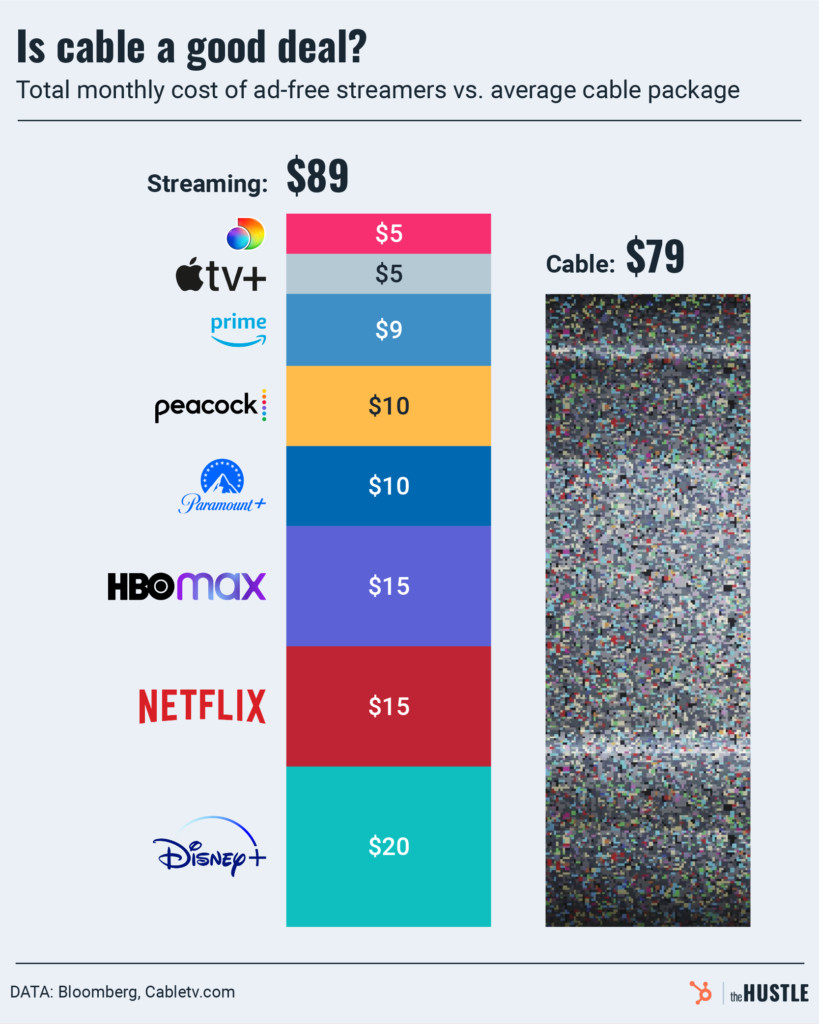

In short, it’s a mess. If you want to build production-grade products in ML, you need to duct tape together 10+ point solutions to stand up an infrastructure that can get you into production. With that come obvious issues with cost (paying too many vendors), unwieldiness (painful to onboard and train new hires), and brittleness (multiple single points of failure, high-friction integrations, frequent breakages and downtime as new updates are pushed out to any one point solution). What began as a few “must have” point solutions that outperformed one-off SageMaker tasks has transformed into a problem in itself. It’s a bit of a heavy-handed (and overused) analogy, but it reminds me of what we’re seeing in the consumer media industry (image per The Hustle):

Jim Barksdale (former President and CEO of Netscape) famously said that there are “only two ways to make money in business: One is to bundle; the other is unbundle.” This held true in the emergence of the cable television era, when networks bundled together local and national channels and consumers converted to cable. As cable got bloated and too expensive, consumers flocked to over-the-top (OTT) streaming providers such as Netflix that provided better value (unbundling). Today, however, as OTT providers have proliferated, a full cord-cutter stack has become more expensive than traditional cable. We’re in the early innings of this shift, but companies are already trying to find ways to “rebundle” offerings by combining adjacent channels and services (e.g., Amazon Prime & Prime Video, Disney+ & ESPN, Hulu & Spotify). TBD on how this plays out in the near term, but I imagine the seesaw between unbundling and rebundling will continue in some way, shape, or form for the foreseeable future.

Analogies only go so far

Unfortunately, where the above (perhaps lazy) analogy falls apart is that ML infrastructure has another problem: nobody makes any money. Or, at least, nobody makes enough money to warrant the amount of VC dollars that have been dumped into the space in the last few years. According to Pitchbook, of the $90B of VC dollars poured into AI software so far (excluding hardware and Autonomous Vehicle companies), more than 30% ($27.4B) has gone into Horizontal Technology / infrastructure: that’s a significant amount given the distinct lack of $100M+ ARR players that have emerged in the space.

In the traditional modern data stack, large enterprises are already used to paying fairly large sums to ETL providers, Data Warehouses, and BI tools (several startups in this space have crossed the $100M ARR threshold, while several others are approaching it). There are many reasons for this, but one of the main ones is that the Big Data trend has been around long enough that most of these Modern Data Stack startups have their roots in engineering and production-quality systems. These solutions are battle tested and Enterprise Ready, and are tackling C-suite priorities that unlock clear customer willingness to pay. Executives know they need to invest in their data stack to stay relevant, and the solutions are mature enough to be deployed in the most complex and demanding environments.

Bringing this back to ML Infra, ChatGPT’s emergence has clearly captured the attention of C-suite executives at Fortune 500 companies, who are now eager to integrate ML capabilities into their own organizations. Where it didn’t exist a few weeks ago, the demand side of the equation is now taking off. However, on the supply side, the proliferation of available point solutions makes for a messy, complicated experience that is distinctly not ready for turnkey enterprise deployment.

Most ML infrastructure products in market today were designed by and for highly technical ML engineers, researchers, and academics. They require significant technical knowledge to operate and often lack, or have only brittle solutions to, critical enterprise requirements such as integrations with IT infrastructure, SOC2 compliance / data security, and private cloud deployment. Notably, as many of these solutions emerged out of research and academia, they also frequently emerge as open source offerings, which Fortune 500 companies are often hesitant to adopt (note: you certainly can build giant Open Source businesses, like Confluent, but it requires a tight GTM motion and an immediately available and strong Managed Solution, which most open source ML infra companies lack today).

In order to be considered Enterprise Ready, AI infra startups are going to need to solve these data security and IT integration problems before they even begin their sales motions with Fortune 500 customers. As excited as they are about AI, many Fortune 500 companies are increasingly concerned about data security and liabilities, and aren’t going to skimp on their compliance requirements to try out new technology (in fact, we’ve increasingly heard stories of large orgs explicitly banning the use of ChatGPT as private client data is being inadvertently shared with OpenAI). You can get around some of the above data and IT security requirements when you sell into other fast-moving AI startups, but eventually you need to graduate to larger customers with more complex needs (and larger ACVs) if you want to reach real venture scale. In an economic downturn where many of these startups run out of runway or cut back spend, this effect only becomes more pronounced.

In short, our belief is that if you’re going to build and scale a sustainable infrastructure software business (particularly in this economic climate), you need to be able to sell into blue chip, Fortune 500 customers. To do that, you need to be Enterprise Ready and able to work regularly with non-technical buyers. Until a few weeks ago, these buyers weren’t that interested in deploying real dollars to build their own ML orgs. That’s changing, and the current landscape of offerings is going to struggle to meet these buyers’ requirements.

So the sky is falling - how does this get fixed?

Prelude aside, VCs are supposed to be optimists. We are big believers that we’re in the early innings of the greatest period of technological innovation since the emergence of the internet 20+ years ago. The AI revolution that began with the Deep Learning breakthroughs in 2012 has hit the mainstream. A common tagline at Radical is that “AI is eating software”: we believe we’ll see almost all of the world’s software replaced by AI over the next decade as static code bases are largely replaced by constantly evolving AI models. This secular shift is a runaway train that will displace whatever issues stand in its path. In short, all of the above issues regarding the state of ML infrastructure will get fixed: they have to be.

How does this happen? First of all, something has to be done about the existing state of ML infrastructure. While some point solutions will naturally die off, our belief is that a handful of centers of gravity will start to emerge where offerings are consolidated. This might happen via M&A, where the most well-capitalized players can scoop up valuable adjacent offerings, or via product development and cross-selling, as companies that own the most customer relationships are able to naturally expand their offerings. One area that we view as a strategic position in the stack is Labeling, which is typically the first thing a new customer looks to as they think about adopting ML and building their own models. Because labeling providers own the first and longest-standing relationship with customers, they are natural organizations to offer adjacent solutions as those customers mature along their ML adoption journey. Snorkel has started to run away with this market in the world of text, while players like Scale AI and Radical portfolio company V7 are quickly starting to do the same in unstructured data such as photos, videos, and audio.

Secondly, we’re seeing increased customer demand for new end-to-end (E2E) infrastructure platforms (rebundling!) that can help people easily build ML applications. Some “AI 1.0” organizations like Domino Data Lab and H2O.ai provide E2E infrastructure for the pre-transformers generation of AI, but we believe the much bigger market exists in a post-transformers world (particularly given the emerging ability to work with unstructured data). We believe there are giant businesses to be built providing this type of solution for both non-technical end users (e.g., business analysts, financial analysts, data teams) and for technical end users (e.g., ML engineers, data scientists, infrastructure teams). It’s an open question as to whether or not we’ll eventually see a convergence of these personas / stacks into singular offerings, but for now that doesn’t seem to be happening.

Lastly, AI startups are beginning to realize that they need to be talking to customers and hiring experienced business leaders earlier in their development curve. By augmenting their technical talent with leaders who have lived and breathed enterprise sales and blue chip customer deployments, AI startups can move towards Enterprise Readiness faster and set product roadmaps appropriately to make sure they’re building solutions that buyers are actually looking for (sometimes it’s worth pushing off an extra 1% of performance to pull forward slicker UI/UX that customers can actually use).

What are we looking for?

In summary, we believe that a massive increase in demand for AI, combined with what is at best a very messy current set of offerings, means that there’s a huge opportunity for entrepreneurs to build enduring businesses in this category. As we think about what we’re looking for, we would identify the following:

End-to-end offerings that make it seamless and easy to build ML applications for both power users and non-technical users (think one stop shops)

Businesses well positioned to benefit from the emergence of the nascent “unstructured data stack” (e.g., SQL for unstructured data)

Data platforms that can help unclog the data glut that exists within ML teams (across data quality, governance, and orchestration)

Very high technical bar solutions that require world-class expertise to build (e.g., world leading researchers and their students emerging from academia, teams leaving cutting edge AI research orgs, teams with experience building mission critical infrastructure in Big Tech)

Across the board, we’re focused on deeply technical Founders who are world leaders in their respective spaces AND who are customer and product obsessed. We think this intersection is what’s needed to seize the moment in the explosion of interest in AI that we’re living through.

A final note on why we love data infrastructure companies

As an AI-focused investment firm, we are as big believers as anyone that the AI revolution is here to stay, and it’s going to be even bigger than what people are expecting. We believe that just as Snowflake emerged in the Big Data revolution, there are multiple generational businesses to be built in the age of AI. Beyond the secular adoption, there are a few general business characteristics that get investors (such as ourselves) excited about data infrastructure businesses:

Offer a “picks-and-shovels” (yet another overused VC analogy) approach to playing the macro trend

Once adopted, they often become mission critical parts of organizations with high switching costs (sticky and recession proof)

Often become “need to have” offerings as markets mature, where the leading providers become industry standard (pricing power)

In some cases can get access to large volumes of customer data which can in turn be used to train their own offerings (data moats)

Benefit from the ongoing demand shift towards cloud agnostic offerings as customers aren’t interested in being locked into a single global cloud provider (defensibility from Big Tech)

If nothing else, we’re very excited about the space and what’s to come. If you’re building in ML infra, data infra, or just AI in general and would like to reach out to chat and share notes, our inboxes are always open!

One analogy I thought of is the following:

If LLMs can be thought of as coffee, then we live in a world where we do not have great tools for coffee bean picking, separating, processing, grinding, and making. As a result, it takes too long to brew a cup of coffee.

This means those who can make a great E2E easy-to-use coffee processing platform for a Tim Hortons or Dunkin Donuts (enterprise clients that value efficiency) will have strong demand, high stickiness, and possibly pricing power. At the same time, a best-in-class coffee bean separator, the data labeling of the coffee business, will be highly valued by a high-end espresso bar (technical companies that want a sophisticated tool).