Unpacking why AI Companies’ funding rounds are so expensive

Or, is this the biggest VC funding bubble since the dot-com crash?

As anyone who hasn’t lived under a rock lately has noticed, AI startups are HOT. While the broader venture investing market has significantly contracted over the last twelve months and VC fundraising just hit a nine-year low, it seems that everyone and anyone is trying to invest in “generative AI” companies these days.

Stability AI raised a $100M round at a $1B Post-Money valuation in October. Anthropic is in the late stages of raising $300M at a $5B Post-Money valuation right now. General Catalyst is allegedly leading an investment round into Adept, and A16Z is allegedly leading a $250M investment round into Character.AI, both at $1B Post-Money valuations. What is most shocking about all of the above fundraising rounds, however, is that all of these businesses are pre-revenue.

Never in venture history have pre-revenue businesses raised this much money at such jaw-dropping valuation multiples (#DIV/0!), and this is happening in the face of the biggest technology valuation pullback of the last twenty years. What gives? Is this simply the result of a record number of VCs trying to deploy a record amount of dry powder in short order? Is “Generative AI” the biggest bubble since the dot-com crash? Or, is there actually something fundamentally different about AI businesses that is driving a de-coupling from traditional valuation metrics and the broader macroeconomic conditions?

While there is an outsized amount of buzz and frothiness in the AI universe today, I would make the argument that a fair amount of this is actually being driven by the underlying economics of AI businesses. What’s the primary culprit here? I’ll give you a quick hint:

Returning to Valuation Math

In my last post, I mentioned that early stage venture valuations tend to be driven by the amount of funding that a Company needs in order to reach its next milestones. Traditionally, this math was fairly simple:

Burn rate = salaries + overhead (rent, software licenses, employee laptops, etc.)

Runway (typically) would be two years

At the Seed stage, the “milestone” required to ultimately raise a Series A was $1M of ARR (while showing sufficient growth)

In practice, this used to mean that a Seed round would typically shake out to be a ~$2M investment at a $6-8M Pre-Money Valuation. Recently, these numbers have probably crept up closer to $3-5M rounds ($10-20M Pre) as startup salaries have increased. However, for AI businesses, there is often a third variable at play in the burn rate: compute / model training spend.

GPT-3 originally cost $12M to train from scratch (ignoring all prior R&D spend). While the cost of training a model of this quality has come down significantly, annual spend has not, as AI companies continue to push the cutting edge forward (we’ve heard that the training spend for GPT-4 has been ~$40M). So far, as has been well documented, the big early winners in the AI space race have been Companies like Nvidia that power model training. I suspect these businesses will continue to feast on VC funding rounds, but eventually, most of the value creation will shift to AI companies themselves.

While AI startups don’t need to compete head-to-head with OpenAI, they do need to figure out a way to provide comparable levels of quality. Often, this means training their own models.

The Cost of Funding Foundation Model Companies

A business that builds its own model (in whichever domain it’s targeting) is often referred to as a “Foundation Model” Company: one that builds its own Transformer-based models in-house. I would consider all of the AI Companies mentioned so far in this post (OpenAI, Stability AI, Anthropic, Adept, and Character.AI) to be Foundation Model Companies. We’re lucky at Radical to have invested in several of these businesses: Cohere, Covariant, Twelve Labs, Hebbia, and You.com, to name a few (not to mention several still in stealth). Our belief is that these teams are building generational businesses in each of their own domains.

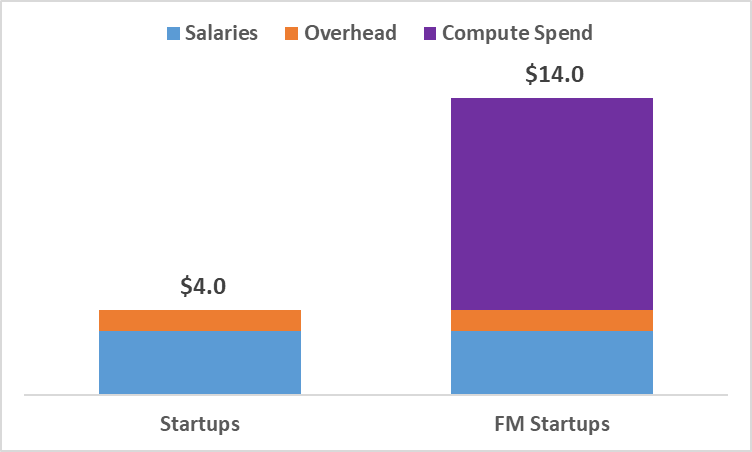

In addition to the businesses in our portfolio, we have been lucky enough to meet with dozens of companies building Foundation Models for their own domains. One thing that’s consistent across all of these players is their need to spend large amounts of cash each year on compute, regardless of their current revenues. In general, at the Seed / Series A stage, we’ve seen businesses in this category expect to spend $4M-10M per year on model training once they’re fully ramped (not including serving costs). As one can imagine, this throws a fairly large wrench into the VC valuation math noted above. Assuming we take the midpoint of the above figure, and that it takes a Company ~6 months to be fully ramped, the “burn rate stack” (is that a real term?) would look something like this for two years of runway:
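For readers who want to play with the numbers, here is a rough Python sketch of that burn rate stack. It is not the exact model behind this post: the $2M per year of salaries and overhead is an assumption (chosen so that two years of a traditional startup costs about $4M), and so is the simplification that compute spend is zero during the six-month ramp and flat at the $7M midpoint afterwards.

```python
# Rough two-year "burn rate stack": traditional startup vs. Foundation Model
# startup. All figures are in $M and are illustrative assumptions.

RUNWAY_YEARS = 2.0         # from the post: runway is typically two years
BASE_BURN_PER_YEAR = 2.0   # ASSUMPTION: salaries + overhead, same for both
COMPUTE_PER_YEAR = 7.0     # midpoint of the $4M-10M training spend range
RAMP_YEARS = 0.5           # ~6 months until compute spend is fully ramped


def two_year_burn(base_per_year, compute_per_year=0.0, ramp_years=0.0,
                  runway_years=RUNWAY_YEARS):
    """Total cash needed over the runway.

    ASSUMPTION: compute spend is zero during the ramp and flat afterwards.
    """
    base = base_per_year * runway_years
    compute = compute_per_year * max(runway_years - ramp_years, 0.0)
    return base + compute


traditional = two_year_burn(BASE_BURN_PER_YEAR)
foundation_model = two_year_burn(BASE_BURN_PER_YEAR, COMPUTE_PER_YEAR, RAMP_YEARS)

print(f"Traditional startup:      ${traditional:.1f}M")
print(f"Foundation Model startup: ${foundation_model:.1f}M")
```

Under these assumptions the traditional startup needs about $4M and the Foundation Model startup about $14.5M, with compute making up roughly 70% of the larger stack. Changing the ramp or the compute figure moves the total quickly, which is the point.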

As one can imagine, this creates a massive shift in what an early stage venture valuation would look like. Leveraging the framework we laid out last week (25% round dilution), if these example businesses got funded, the traditional startup would raise at $12M Pre / $16M Post, and the Foundation Model startup would raise at $42M Pre / $56M Post: a bizarre outcome for what is clearly a less capital efficient business.
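The valuation arithmetic here is simple enough to sketch in Python. The round sizes below are not stated explicitly in the text; they are the amounts implied by the quoted Post-Money figures at 25% dilution ($4M and $14M), and the function name is just illustrative.

```python
def valuation_from_round(round_size, dilution):
    """Return (pre_money, post_money) for a round sold at a given dilution.

    post_money = round_size / dilution
    pre_money  = post_money - round_size
    """
    if not 0 < dilution <= 1:
        raise ValueError("dilution must be in (0, 1]")
    post_money = round_size / dilution
    pre_money = post_money - round_size
    return pre_money, post_money


DILUTION = 0.25  # the 25% round dilution used in the framework above

# Round sizes in $M, implied by the Post-Money figures quoted in the text.
for label, round_size in [("Traditional", 4.0), ("Foundation Model", 14.0)]:
    pre, post = valuation_from_round(round_size, DILUTION)
    print(f"{label}: ${round_size:.0f}M round -> ${pre:.0f}M Pre / ${post:.0f}M Post")
```

Because dilution is held constant, the valuation scales linearly with the cash the Company needs: a 3.5x larger round produces a 3.5x larger valuation, regardless of how efficient the business is.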

Deeper Down The Rabbit Hole

Beyond increased burn rates, however, Foundation Model companies also tend to have different milestones than traditional startups. Unlike a traditional startup, which can simply write code, ship a product, and iterate on customer feedback, Foundation Model companies need to spend more time building and training their models in order to get a product to a position in which it’s viable and ready to be used. Often, this can take multiple years, millions of dollars (or, in the examples in the first paragraph, hundreds of millions of dollars), and several iterations before products are good enough for Companies to charge customers to use them. In short, when we think about milestones, it’s not fair to apply the traditional startup metrics listed above to these companies. Almost none of these companies will have $1M of ARR at the time of their Series A, and many will spend tens (or hundreds) of millions before charging their first customer anything.

In practice, we’ve seen these types of Companies target “product milestones”, instead of revenue milestones, as they look to raise Seed and Series A rounds. As opposed to just throwing VC money into the fire (I’m looking at you, 15-minute grocery delivery), these milestones attempt to represent some way to keep Companies accountable as they progress and grow. A Foundation Model company that cannot hit any of its own relevant milestones (e.g., world-class performance in its domain) will not be able to raise a subsequent funding round, the same way that a traditional SaaS company won’t be able to raise if it misses its revenue targets.

What about what you said last week? What’s with the inconsistency?

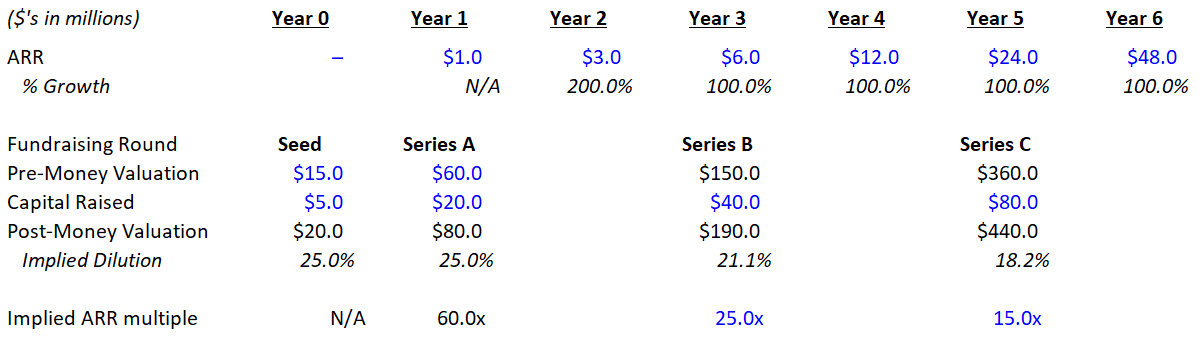

Yes, last week I made the opposite point to this one: “Eventually, the math is going to matter” (I think that’s the first time I’ve ever quoted myself). Let’s revisit the good outcome from last week (Scenario A):

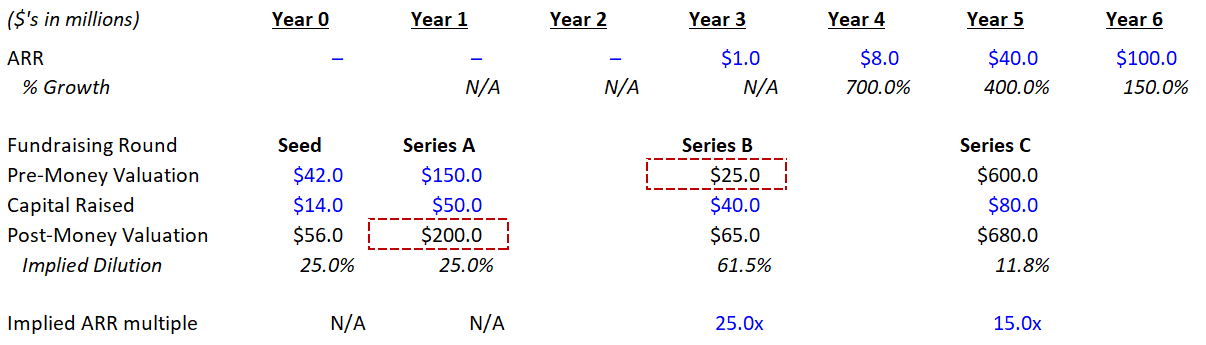

In this example, the Company raised a large Seed and a large Series A, and therefore needed to grow extremely quickly to raise a successful Series B (in this case, get to $6M of ARR). If we apply the same principles, but to a Foundation Model business (which likely won’t have any revenue until ~Year 3), the math quickly breaks:

Yikes - a down round going from $200M to $25M would clearly kill a business. Are these really just awful businesses? Or is this a similar situation to hardware businesses, which have long been debated as not venture-backable due to their capital intensity (just search “hardware is hard”)?

I would make the argument that Foundation Model businesses are actually in a different boat altogether: while they’re capital intensive up front, they CAN scale at rates fast enough to justify the capital investment. As some folks might’ve noticed in the above example, the Foundation Model business actually passes the Software business in ARR in Year 5, and therefore raises at a higher Series C valuation. If this hypothetical company did manage to survive, it would become the larger (and more valuable) business in the long run.

The Magic of Exponential Growth

I already know what you’re thinking: This is a ridiculous example: Companies don’t 8x revenue YoY. Can’t anyone just hardcode in higher growth figures to make the math in any model work? The answer to that is obviously yes, but I think this example is enough of a prompt to get the ball rolling. If you’re going to participate in investing in these businesses, you have to believe that they’ll grow faster than businesses ever have before.

Professor Albert Bartlett famously said that “The greatest shortcoming of the human race is our inability to understand the exponential function”. Professor Albert Einstein famously said that “Compound interest is the eighth wonder of the world. He who understands it, earns it…. he who doesn’t… pays it” (That’s right, TWO quotes from Professors this week!).

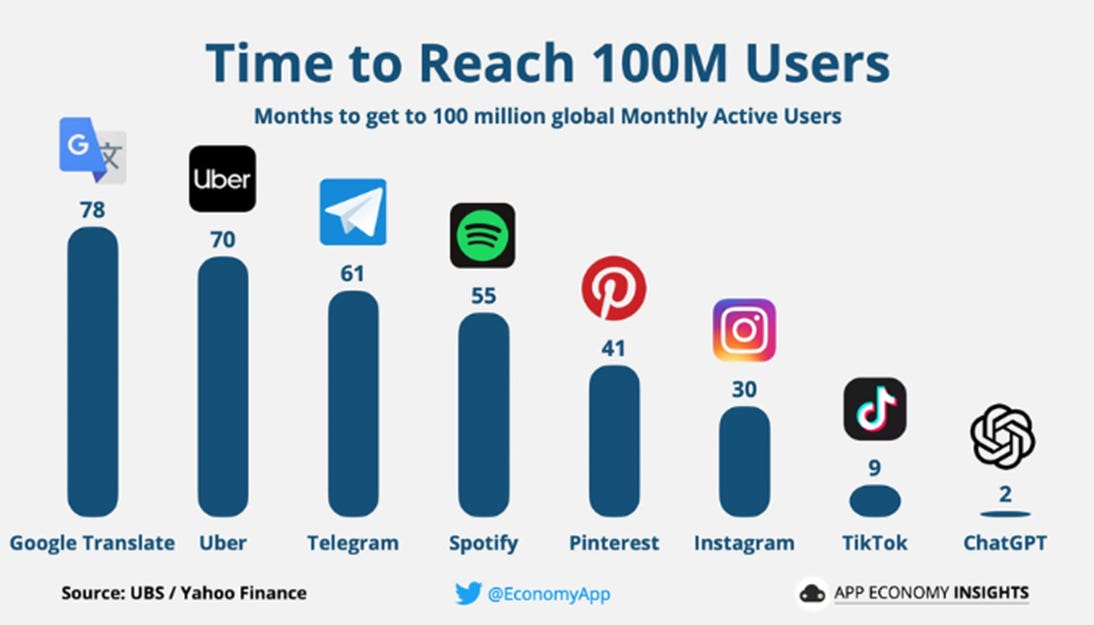

The simple point being communicated here is that exponential growth is a big deal, and is often an unintuitive concept to wrap our brains around (who else remembers the fable from elementary school about the penny that doubles every day for a month vs. a million dollars?). It is exceptionally rare to actually see it in action, but I believe we are already seeing it in the rate of AI adoption:

Above is a graphic that outlines the number of months it took for ChatGPT to reach 100 million users globally (which I find to be even more impressive than the widely cited graphic outlining ChatGPT’s timing to reach one million users). It’s almost unbelievable how viral the adoption of ChatGPT has been. I’m of the opinion that we’re still in the very early innings of what AI will ultimately be able to do, and I think we’re going to see many more products reach similar (and perhaps even more impressive) adoption numbers given the quantum leap forward AI is promising.

As we see unprecedented growth in these types of technologies, I think that we also need to be prepared to see unprecedented growth in the successful startups that emerge. For these startups, gone are the years of “triple, triple, double, double” breakout venture growth: I think we’ll see a new, higher standard emerge for the very best businesses.
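To put some numbers on the compounding discussed in this section, here is a small Python sketch. It checks the penny fable and projects ARR under “triple, triple, double, double” starting from the $1M ARR Series A milestone. The second growth path (one 8x year followed by triple, double, double) is purely hypothetical and is not taken from the scenario tables; the function names are illustrative too.

```python
from itertools import accumulate
from operator import mul


def penny_value_cents(day):
    """Value in cents of a penny that doubles daily (day 1 is the original penny)."""
    if day < 1:
        raise ValueError("day must be >= 1")
    return 2 ** (day - 1)


def project_arr(starting_arr, multipliers):
    """ARR at the end of each year, given year-over-year growth multipliers."""
    return [starting_arr * m for m in accumulate(multipliers, mul)]


# Penny fable: what the doubling penny is worth on day 30, in dollars.
print(f"Penny on day 30: ${penny_value_cents(30) / 100:,.2f}")

# "Triple, triple, double, double" from $1M ARR (figures in $M).
t_t_d_d = project_arr(1.0, [3, 3, 2, 2])

# HYPOTHETICAL faster path: a single 8x year, then triple, double, double.
faster = project_arr(1.0, [8, 3, 2, 2])

print("Triple, triple, double, double ($M):", t_t_d_d)
print("8x first year, hypothetical ($M):   ", faster)
```

The penny ends day 30 at $5,368,709.12, comfortably ahead of the million dollars. The classic path takes $1M of ARR to $36M in four years, while a single 8x year up front takes the same business to $96M: the early multiplier carries through every later year.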

Putting it all together, my current perspective (and clearly, that of other VCs as well) is that Foundation Model companies represent a “deeper J-curve” (or “hockey stick” for my fellow Canadians) than what we have seen in past startups, as more capital is required up front to unlock exponential growth on the back end.

This time is different

There are two major points here that you have to accept as true, however, in order to believe this hypothesis:

AI solutions are “10x better” than legacy software solutions and are going to grow much faster than traditional SaaS startup benchmarks (i.e., faster than 2-3x YoY). In this scenario, you’d have to believe that the shift towards AI will be the disruptive “platform shift” that we see in the Technology space once every 15-20 years (shift to internet, shift to mobile, etc.).

The sheer cost and scarcity of compute resources will create a strategic moat for startups that raise rounds quickly and train their models first. While the cost of compute is decreasing, annual compute spend is not. Historically, the “capital as a moat” strategy has never worked well (again, 15-minute grocery delivery), but traditionally that’s money being spent on sales and marketing promotions. If compute spend is more defensible, it may become a strategic advantage (similar to how building a factory can be a durable strategic advantage for manufacturers).

If you don’t believe either of those will be true, I think it’s safe to say that you’d disagree with the above hypothesis. Stating that “this time is different” is widely pointed to as one of the most common investing pitfalls in history, so if you’re going to make a bet on that, you’d better REALLY believe it.

So what does it all mean?

TL;DR: AI businesses that build their own models (Foundation Models) are more capital intensive upfront, but offer the promise of exponential growth in the long run. So far, OpenAI’s ChatGPT is the only real example of that coming to fruition.

As I think about what this all means, a few things come to mind:

If these businesses DON’T end up experiencing outsized growth, they’re SOL. Many of them will die and we’re likely going to see some of the biggest blowups in VC history in the coming years (note to our LPs: none of this will ever happen to one of our Companies because we’re very smart and never make mistakes).

The ones that DO execute on the promise of exponential growth will reach a size and scale that almost no startups have before. For the first time in the last decade or two, it feels like the current Big Tech companies are actually vulnerable to disruption (see: Alphabet losing $100B in market cap in a single day after investors worried they were falling behind in the AI race).

The “deeper J curve” hypothesis requires later stage funding rounds in this category to be executed using the same principles as early stage venture rounds, with valuations disjointed from business fundamentals. While this sounds like a tall order, so far, investors seem to be willing to play that game (it’s worth noting, though, that many of the funds leading these rounds are the growth investing arms of traditional VC funds, as opposed to pure-play growth investors).

While it has historically been disregarded as unsustainable, there is likely some validity to the “capital as a moat” strategy that has always been widely debated. As potential employees and customers acknowledge the outsized risk in the category, we’re increasingly seeing them drawn towards companies that have already raised massive venture funding rounds and therefore have real runway (I would rebrand this one as the “Field of Dreams” fundraising strategy: if you build it, they will come).

Inevitably, we’re bound to see significant amounts of disruption in the coming years with increasing amounts of AI adoption. While I generally believe that AI will bring more good than harm into the world, there will be legacy businesses that don’t survive the transition. I suspect businesses with strong distribution advantages (e.g., proprietary sales channels) are the least likely to be disrupted.

A brief note on other types of AI businesses

It should be noted that most AI businesses are not Foundation Model businesses themselves, and as such don’t require the same level of capital intensity. Some businesses are purely Applied AI offerings that leverage other companies’ Foundation Model technology and use it to power their tailored offerings in specific end markets (e.g., Marketing Copy Generation). These businesses can sometimes experience the best of both worlds and benefit from minimal capital intensity AND exponential adoption (e.g., Jasper AI), but sometimes struggle with building defensibility around their business / preventing churn.

Others take a midpoint approach, and focus on significantly “Fine Tuning” a Foundation Model’s technology to their specific customer or use case, often with a unique and proprietary dataset: mitigating some of the capital intensity while still maintaining the defensibility of their offering.

Others still are building Tooling around Foundation Models (LLMOps / FMOps, etc.), and don’t need to worry about any model training themselves, but can also surf on the exponential growth in the broader category (these are often referred to as “picks and shovels” plays on the trend). These are experiencing viral developer adoption right now, but there’s also an outstanding question about how durable these offerings are in the long run, and if Foundation Model businesses will just replace these tools with their own.

What we’re seeing, however, is all three of these business types trying to raise at Foundation Model valuations given the hype in the space, which from my perspective doesn’t make sense given their lack of need for compute spend. As tourist investors enter the space, however, we suspect that several of these businesses will get funded and may fall into the valuation trap that we spoke of last week. As exciting as the space is, I’m expecting a lot of current vintage Companies to go to zero in the coming years.

That’s it for this week - as always, I appreciate your feedback and constructive criticism on any of the above!

Good article. I think there are a lot of AI-first companies out there, not just FM companies, that have been caught being compared to SaaS companies by VCs. I collected data from my peers two years ago and it showed that, on average, it took an AI-first company $27M to get to $1M ARR. A capital efficient SaaS company needs less than $1M to get to $1M ARR. So the AI-first company shows up to the next round looking like the most capital inefficient business in history when in fact you've done a pretty good job. One thing I would add is that the cost difference between an AI-first company and a typical SaaS company isn't just compute. I've found that AI-first companies need to have larger engineering teams (sometimes 2x-3x the size). And these extra engineers are the very expensive kind. So take the R&D expense of a typical SaaS company, double it, and THEN add on all the compute costs of building an FM.

Good thoughts Ryan. As a startup that has been working in Generative AI since before it was called that, a key learning for us was the shift away from cloud. As a small startup, pretty bootstrapped, we decided to on-prem our GPUs, thanks to our relationship with Nvidia - the FM/Generative AI world may trigger such a transition as cloud costs become unbearable. Obviously other factors, including power consumption costs, will need to be factored in along with maintenance of GPUs; however, we have not looked back since getting off the cloud for our GPU needs.