When will AI do my job for me?

... and what does this all mean for the next generation of college graduates?

When we think about notable inventions and innovations throughout history, they’ve almost always been accompanied by the disruption / replacement of some jobs. Typically, the employees in these jobs have been blue collar workers: factory workers, farmhands, lumberjacks, etc. Even the most notable innovations of the digital era have typically made white collar workers more efficient without displacing their jobs entirely (or at least, not the bulk of them).

As AI applications have begun to proliferate in recent months, however, the nervous energy emanating from white collar workers in skyscrapers around the world is palpable. This time, it seems like some of the highest paying jobs in the world are at risk of being disrupted (will all the Excel shortcuts I’ve learned over the years become worthless??).

Fear not, keyboard warriors, for there is a flipside to this argument as well: what happens if AI applications take away the very worst parts of our jobs, leaving behind only the intellectual, creative, and relationship-based bits? I certainly would pay a lot of money to never have to reorganize logos in PowerPoint ever again (*shudders*).

Targeting monotony: where does AI work best?

Today, AI systems work best at automating repetitive and monotonous tasks. Anything that is even remotely close to being “templated” is a good target for automation, particularly if the workflow is replicable and the outputs are measurable. These characteristics make it possible to train models on narrow tasks / datasets (fine-tuning), and enable quicker and simpler feedback loops for improving those models (e.g., reinforcement learning).

We’ve already seen these factors in action: the first generative AI applications to gain meaningful traction were in marketing copy generation. This workflow is fairly commoditized, replicable, and has clearly measurable results (e.g., page views / engagement). While this was the first generative AI category to catch fire, it certainly won’t be the last. Moreover, just because copy generation was the easiest category in which to deploy solutions doesn’t mean it will end up being the largest.

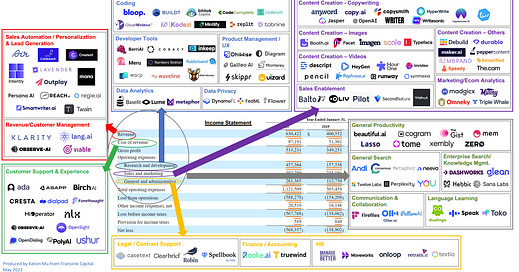

As thoughtfully laid out in the graphic below by our friend Kelvin Mu at Translink Capital, almost every line item of a company’s income statement now has a Generative AI tool that exists to serve it:

While the marketing tools are ahead of the pack in terms of automatability (and therefore customer traction), many of these other companies are beginning to take off as well. Broadly speaking, I think of each of these Generative AI companies as “Horizontal” Applied AI businesses.

From side-to-side to up-and-down

In the world of B2B SaaS, the largest companies were first built as horizontal applications that cut across industries. Generally speaking, though, these applications don’t always address the specific needs and nuances of the various end markets (or “verticals”) that they serve.

As a result, the era of horizontal SaaS gave way to the era of vertical SaaS: tailor-made solutions that accounted for the specific nuances and bespoke workflows of entire industries. While these companies weren’t always as large as horizontal plays (you’re often constrained by the TAM of the end market you serve), they were more plentiful and typically had stickier business models. Many VCs have built careers backing vertical SaaS businesses in niche end markets, given the clearly effective playbooks and the decades-long macro trend towards digitization.

Generally speaking, however, vertical SaaS applications only made employees’ jobs easier and typically didn’t displace many workers. Returning to the realm of AI, we’ve seen horizontal applied AI businesses remove the need to hire certain categories of workers entirely. If history repeats itself, what does this mean for the inevitable emergence of “Vertical” applied AI businesses?

The canary in the coal mine: attorneys

We mentioned above that AI works best on monotonous and repetitive tasks. But there is also a specific type of data (or modality) that current AI models excel at working with: language. Put two and two together, and there is no more monotonous and repetitive language task that I can think of than the day-to-day work in the field of corporate law (apologies to my many family members who have dedicated their careers to the profession: I still respect your choices).

It’s no surprise, then, to hear that the first real vertical applied AI businesses to take off are in the field of law. Historically, there was doubt that efficiency tools could be sold at scale to law firms, which thrive on the concept of “billable hours”: charging clients for the number of hours they spend on a specific case / file. Why would firms want to work fewer hours for their clients when hours worked were their lifeblood? Even if they took on more clients, their take-home would be the same.

Turns out, the answer is a bit more nuanced than that. While many hours are indeed billable, some categories of labor are not entirely billable. Furthermore, the big unlock here has been that some hours are more painful, commoditized, and ultimately less lucrative for law firms than others: specifically, the hours associated with the most junior lawyers, the associates.

In an industry in which competition has never been fiercer and there’s massive downward pressure on fees (it seems like fee escalation has finally hit a breaking point as clients have begun to push back), this has become a natural place to cut costs. Given that the real differentiation amongst law firms tends to be at the senior levels, where lawyers are paid for their experience, creative thinking, and personal networks, automating monotonous associate-level work has emerged as the leading solution to the problem. While each associate is cheaper to employ than a senior lawyer, the sheer volume of them makes them a very meaningful expense category (and therefore, TAM).

Unsurprisingly, this is where AI applications have begun to proliferate and drive real traction: the combination of a dire need to cut costs and the perfect raw materials for modern AI has proven to be a real recipe for success. In recent weeks, we’ve heard of multiple LegalTech startups that have seen inflections in revenue growth as they’ve deployed generative AI products that help to automate this workflow.

Where to next? When am I going to be out of a job??

Using the world of law as a case study, I’ve been thinking about which categories are the next dominoes to fall. To keep things comparable, I’ve tried to focus on “professional services” businesses, which share similar characteristics with the realm of corporate law (i.e., other white collar “firms”, LLCs, and partnerships).

This is still very much a work in progress, but below are some of my early thoughts on what a market map of the landscape looks like. It’s certainly not exhaustive (obviously, this was pretty painful to build manually… hopefully this will be one of the last market maps I have to build myself):

Using the lessons learned above (monotonous and text heavy = easiest), I’ve loosely ordered the rows by increasing “difficulty” of automation. It’s worth repeating that many of these jobs start to look very similar at the most senior levels (where professionals compete on experience, creativity, and personal relationships), but at more junior levels, the work looks quite a bit different.

My early hypothesis is that Accounting is going to be the next category of professional services automation. While this work is more numbers / structured data heavy than Law, it’s certainly extremely monotonous and repeatable. Generally speaking, there are objectively correct answers to questions (assets = liabilities + owners’ equity!!), most tax filings and quarterly reporting exercises look a lot like each other, and the end output is fairly commoditized across the Big Four firms. Once LLMs get better at working with numbers and doing basic math, I imagine we’ll see solutions take off in this field (I can’t wait until there’s an LLM that files my taxes for me).

Consulting and investment banking are next on my list. Both industries ask junior employees to spend time in PowerPoint and / or Excel producing decks and financial models that look fairly similar to materials produced in the past. I think market research, slide building, and the refreshing of historical case studies have slightly less nuance to them as workflows than financial modelling, so I would say the “associate work” of consultants is probably a bit more commoditized than that of investment bankers, at least on the margin. At the end of the day, I think senior professionals in both of these fields would kill for an AI program that turns their comments (i.e., implements their edits) immediately and correctly (TBD on whether or not it will be able to read MD chicken-scratch).

Lastly, we get to investment firms. Another key variable emerges here: these firms are managing other people’s money. Unlike the firms above, which charge fees and are compensated for their output, investment firms are primarily compensated for their results. As such, I think there’s more embedded hesitancy to automating some of the processes in these industries. While memo creation and competitive landscaping might soon be generated by AI, I don’t expect a human to be truly removed from the loop in the near future: if nothing else, people want someone to blame when money is lost.

Public markets investing and private markets investing are differentiated here by two key factors. Firstly, public markets data is typically more easily accessible and is usually formatted / reported in a more consistent manner than private markets data. Secondly, the private markets workflow tends to be more meetings-heavy, and therefore lends itself more to human intuition (e.g., EQ, judgment, trust, relationship building), even at the more junior levels. I don’t think I’m going to convince a founder to take a Zoom meeting with “RyanBot” before they can meet with me anytime soon (I promise that’s just a joke - meeting with founders is the best part of my job… but I could live without formatting their logos in investment memos).

What does this mean for these industries in the long run?

Long story short, I think AI will quickly eliminate the most painful, boring, and monotonous parts of professional services work. What it won’t eliminate, however, is the more differentiated, thoughtful, and strategic work: Human relationships. EQ. Strategic decision making. Dealing with ambiguity. Negotiating. Sales. Management and mentorship.

In an era in which the underlying work product is commoditized, the more “human” elements of work become more important than ever. If I ever did get a “RyanBot” or “Associate.ai”, it would allow me to spend more time doing the parts of my job that I like the most and find the most fulfilling. I think it would also make me a better investor in the long run, as time and energy are freed up for more important tasks.

I am lucky enough, however, to have already been in the workforce for some time. What does this shift mean for those who are just entering the workforce now: people who typically earn their stripes by doing the manual work that nobody else wants to do, in exchange for mentorship and valuable work experience?

Professional services industries are often described as “apprenticeship businesses”, in which individuals learn the ins-and-outs of the craft from their more experienced superiors. While the grunt work is generally grating and unfulfilling, completing it provides the experience and training needed to be promoted into the more critical human-to-human work at higher levels.

Almost all professional services orgs are structured like a pyramid: armies of junior employees at the bottom, who do the grunt work, and more experienced employees at the top. Depending on who you ask, the ones who rise to the top are either the hardest working and most talented, or the ones who can survive the grueling work conditions longer than others. Either way, experience and mentorship are needed to fulfill more senior roles. Consider the graphic below, which outlines the org structure of a typical investment banking team (which I think is fairly representative of all professional services firms):

Given the “human elements” of the upper levels, I don’t see them being replaced anytime soon. But what happens if AI replaces the need for the bottom layer or two of the pyramid? Where will the future senior leaders come from? In short, I believe that organizations that adopt AI in meaningful ways risk disrupting their talent pipeline and succession planning. Will future investment banks feel comfortable hiring senior leaders who have never built a model or worked on a live deal before?

Even more concerning is what this means for new college graduates. Historically, what the inexperienced had to offer was work ethic, humility, and grit. As these jobs disappear, will we see a particularly challenging job market for new graduates? How can anyone break into an “apprenticeship” industry if nobody is willing to take them on as an apprentice? I believe these are still unsolved questions.

Key Takeaways

There’s a lot here, but if I try to boil all of my learnings in this article down to a few takeaways, the following thoughts come to mind:

AI is going to have an asymmetric impact on the global economy. For potentially the first time ever, white collar workers are expected to face more disruption than the working class.

There’s a vocal debate these days about whether or not it makes sense for every student to go to university / college. If nothing else, I think skilled trades seem more “AI-proof” than desk work these days.

AI works best at replacing repetitive, monotonous, templated, and language heavy labor. The field of Law certainly feels like the first domino of the Professional Services world to fall, but it won’t be the last as AI gets better at dealing with numbers and productivity tools (e.g., Excel and PowerPoint).

Professional services fees are bound to compress in many of these industries as costs come down and competition heats up. I suspect adopting AI tools will become table stakes in markets where firms are expected to do more with less.

I expect that several massive Vertical-focused Applied AI businesses will be built in this moment, similar to the emergence of vertical SaaS in the last generation. Much of the differentiation in these businesses comes down to really understanding the pain points and nailing the product / workflow.

I suspect the winners in this category will be built by founding teams that marry deep vertical expertise with deep technological expertise: having only one component will make it hard to nail the product and build economic moats.

While this likely makes the jobs of current professionals more efficient and more enjoyable, it presents several major challenges for the future: most notably, where will future professional services leaders come from? How can anyone break into an “apprenticeship” industry if nobody is willing to take them on as an apprentice?

Clearly, something needs to be done to fix this. But is the solution driven by regulatory change, or business change? Will businesses be willing to take on junior employees and train them for multiple years before they can start to add tangible value to their companies?

Given my career arc, this is a category I’m extremely interested in. If people have thoughts and / or feedback on the hypotheses I’ve laid out above, I’d love to hear them.

As a shameless plug, I’m also very interested in meeting more founders building in these categories. In particular, I’d love to speak with founding teams that are bringing together vertical expertise and technical AI expertise to tackle these problems today: even better if you have a compelling strategy to build a moat around the business you’re building.

If you or someone you know is building in these spaces today, please don’t hesitate to reach out!