One of the most frequently cited critiques of startups is that they don’t have a “moat” around their business. This has become increasingly relevant for Generative AI Companies, which are often dismissed as being “only a thin layer built on top of GPT-3/4” or “just a UI built on top of Stable Diffusion” (both supposed examples of being an unsustainable business).

Like many things in the world of venture, I worry that this statement is often being repeated without the necessary critical thinking about what it actually means. Today, I’d like to dive into what a moat really is, and then analyze whether or not it’s really fair to say that none of these Generative AI businesses have one.

The best offense is a great defense

When investors talk about a Company’s moat, what they’re really referring to is “what is this Company’s defensibility”. In other words, what is stopping other Companies from doing the same thing at a lower price and winning away customers? This is obviously an important characteristic of a business as it defines what a Company’s risk profile looks like: defensible businesses are lower risk and more predictable, meaning they’re much less likely to contract or disappear overnight. Investors obviously don’t want that to happen, so if a Company does not appear to be defensible, investors would have to believe there is a massive amount of upside in a business to believe that the risk-reward profile is attractive. In short, the more defensible a business is, the more valuable it is. Warren Buffett famously branded this concept of defensibility as an “Economic Moat”, which protects the economic castle within (i.e., providing outstanding returns to investors).

However, the idea of defensibility or an economic moat can refer to two distinct concepts, which are often used interchangeably:

Stickiness

Superior value proposition

While both factors are important and valuable, it’s important to highlight the nuance between them: stickiness refers to KEEPING customers, while a stronger value proposition refers to winning NEW customers. These terms are typically used interchangeably when assessing large, mature businesses, but I believe it’s important to keep the distinction in mind when assessing startups, which typically are more focused on growing their revenue base from zero.

Sticky, not icky

While I’ve always thought the term “stickiness” was too colloquial to fit in with the rest of investing jargon, it does benefit from the fact that it’s fairly self-explanatory. If a business is sticky, it means that it’s hard and / or annoying for customers to switch to another provider, even if that provider offers a better price. A few examples of sticky business characteristics include:

Annual or multi-year contracts

High switching costs (e.g., time consuming to change vendors, required down time, employees need to be retrained / have loyalty to current offering, etc.)

Intentional lack of interoperability (e.g., data cannot be ported to a new vendor, integrations / connections have to be rebuilt)

Decoupled payor and decision maker dynamics, wherein the users of a product are not the ones paying for it (e.g., government subsidized businesses in which buyers are not the actual ones footing the bill)

Having any combination of the above points makes a business “better”, but this is particularly notable for companies that already have a large customer base. In practice, stickiness helps to prevent businesses from being undercut or disrupted, and therefore tends to be a lot more important for mature companies (think private equity backed or publicly traded). For startups, this is less relevant, particularly in new markets in which all customers are up for grabs.

Designing an unfair advantage

Having a superior value proposition, on the other hand, refers to a Company’s ability to win new customers. These factors are the most valuable defense to the question of “why can’t someone else go do this”, or “what happens when a wave of other startups emerge in this category”. This is particularly important in hot / buzzy spaces, where new startups emerge every week and run the risk of commoditizing each other.

As we think about startups that can take off and grow at venture scale, these are the types of economic moats that are most compelling and interesting to us. A few examples of moats that drive superior value propositions in the technology / startup space include:

A data moat in which you have access to data that nobody else has

A technical moat in which your team is stronger than anyone else in the world (note: this matters much more for some businesses than others)

Network effects in which increased numbers of customers improve the value of a product

Cost advantages in which it costs you less to operate (e.g., vertical integration)

Size advantages in which you can produce more efficiently (e.g., economies of scale)

Distribution advantages in which it’s easier for you to get your products to your customers

A well known and trusted brand

Too often Companies and investors point to sticky business characteristics when assessing a startup’s moat, when in reality, the ability to offer a superior value proposition is far more important to growing a low revenue base. While stickiness inherently makes a business better, it is often less important in the early innings of a Company’s success than developing a deep value proposition moat.

Back to Generative AI

In my last post we spoke about Foundation Model companies, which train their own models in their specific fields (e.g., language, video) to use as a point of differentiation. We also briefly touched on the point that the capital being poured into model training may create a moat for these Companies:

“The sheer cost and scarcity of compute resources will create a strategic moat for startups who raise rounds quickly and train their models first… If compute spend is more defensible, it may become a strategic advantage (similar to how building a factory can be a durable strategic advantage for manufacturers).”

I think it’s fair to say that if you believe the above statement is true, Foundation Model companies will have a cost + size advantage (not to mention a GPU accessibility moat) around their businesses. Anyone else looking to enter the industry will have to go out and recreate this to compete against them, and will presumably be playing catch-up for some time.

But what about everyone else building in Generative AI (for example, Applied AI businesses)? Is it really fair to say they don’t, and won’t, have any moats around their businesses? Personally, I think that’s too simplistic a viewpoint, and it glosses over what we would typically see as moats in traditional businesses. In this article, I’d like to visit three of the most common moats that we see being built by Applied AI businesses.

1. The holy grail of AI moats: a data moat

AI models are defined by their data: they’re definitionally a garbage-in, garbage-out situation. At this point, the sizes of AI models are largely flattening out, but there’s an increasing focus on improving data quantity and quality (sometimes referred to as the Data Centric AI movement). Companies that are able to pull together a proprietary dataset that others don’t have access to will ALWAYS have a massive advantage in the world of AI.

For those interested in diving deeper on this subject, I’ve found Abraham Thomas’ Substack post on the economics of data businesses to be an exceptional read. There are a lot of interesting insights into data collection in this piece, but here are a few data collection strategies that we see as being core to AI businesses (loosely ranked in order of effectiveness):

Exclusive relationships: Exclusive access to someone else’s proprietary dataset (think hospital systems or research institutes that have built up their own datasets via brute force over a number of years)

Viral free offerings: By offering a service for free, you can get access to large volumes of user input and feedback on a proprietary basis (e.g., both ChatGPT and Stable Diffusion have now generated proprietary datasets)

Customer data: Many AI companies have structured agreements with customers in which the anonymized customer data and usage can be used to train master models (without the readable data itself ever leaving customer servers)

Brute force data enrichment: Aggregating a large amount of commoditized data and then enriching it yourself to make it more valuable (e.g., labeling)

Synthetic data: Some businesses are themselves in the business of “creating data”, though this doesn’t mean other businesses can’t create it as well (or pay a vendor to do so)

There are other examples, but these tend to be the most common ones we see in the world of Applied AI. If folks have perspectives on other sorts of data collection strategies that have worked well, I’d love to hear about them.

2. Easy to claim but hard to prove: a technical moat

There is one thing about AI that (for now) differentiates it from traditional software: it’s really hard. Modern AI (post Deep Learning revolution) has only really been around for a decade, and it has only become a mainstream discipline in the last few years. Much has already been written about the global technology skills gap, but in no place is that felt more acutely than in AI. In McKinsey’s recent state of AI report, AI data scientists were listed as the single most difficult position to fill in technology departments, with machine learning engineers not far behind.

The reality of the situation is, AI is new, and it’s evolving rapidly. There are simply not enough people in the world who know how it works, how to build with it, and how to stay on top of everything. For this reason, we’re seeing AI companies break out ahead of competition when they have stronger technical teams who can keep pace with the speed of innovation in a space, and have the credibility to recruit and retain top tier talent from the elite institutions around the world.

The best example of this is perhaps seen in the buzziest AI subcategory of them all, Large Language Models (LLMs). Notably, LLM providers DO NOT have access to a proprietary dataset, as they’re all trained on the same corpus: the internet. Instead, they’re competing with one another on the basis of technical competency, where the very strongest NLP teams have been able to build the very best products. We’ve seen this at OpenAI, Anthropic, and Radical portfolio company Cohere, which have all dramatically outperformed their LLM competitors despite a commoditized data corpus.

For Applied AI businesses, however, not every use case will require a differentiated technical team. For example, we’ve seen the early winners in the marketing copy space emerge from non-technical backgrounds, where nailing product and customer experience has proven to be more critical than technical capability. The important distinction here ultimately becomes a question of “does this really matter” in this use case. If you’re able to prove that it does really matter, and you have the strongest technical team in the space, we view that as an incredibly deep and defensible moat.

For highly complex Applied AI use cases (e.g., ML infrastructure) or use cases where the last 1% of accuracy really matters (e.g., healthcare), we see this as being critically important. For use cases that are less complex and less mission critical (e.g., sales and marketing), we see this as being less relevant, and find it more challenging to believe that a real moat is being created.

3. The one that favours incumbents: a distribution moat

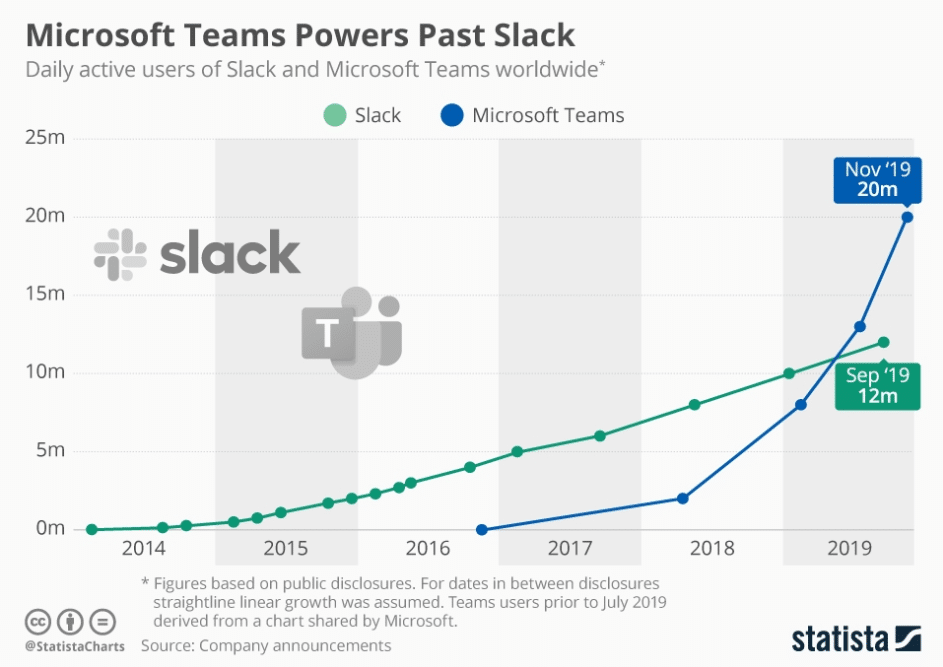

On the flipside, any AI founder needs to be keenly aware of the moat their more established competitors might be able to use against them: a distribution advantage. Below is one of my very favorite charts from Statista that illustrates this point beautifully (albeit in a different industry:

Despite being a diehard Microsoft fan, I will be the first to admit that Microsoft Teams is an awful product (seriously, who chose “Barney the Dinosaur Purple” as the primary colour scheme?). Slack is a significantly more elegant, easy to use, and dynamic product. They also had a ~3 year head start on the Teams product launch, which they used to build up a large customer base and brand awareness before Teams got off the ground. What happened?

The short answer is that Microsoft already had relationships with over 1 billion Office end users and 1 million different companies worldwide. As soon as they offered up Teams to their customer base (and were able to bundle it with a video product), they were able to explode past Slack in total userbase (Teams hit an astounding 270 million monthly active users in 2022).

Slack was still a major success story as it was acquired by Salesforce for $27.7B, so I’m not sure anyone feels bad for them. That being said, organizations are reacting faster than ever, with several massive software businesses quickly building out a strong Applied AI offering. Two of the most notable examples here are Notion (30M+ users) and HubSpot (167K companies as customers), who have both launched well-received AI products in recent weeks. For anyone looking to build an AI-native note taking application or CRM tool, that sales pitch just got a lot harder.

Part of the lesson here for AI startups is that if your competition has a strong distribution advantage, you need to move very quickly to build up a following before they wake up. Microsoft has already claimed that they’re going to further implement OpenAI products into PowerPoint, but AI-native startup Tome has already hit 1 million users just 134 days after launching: it’s hard to imagine them going away anytime soon.

In summary, get digging

It goes without saying that building a deep moat is more important than ever given the speed of innovation in the world of AI. While implementing a business model that makes your business stickier is always a good thing, that doesn’t really help you to go out and acquire new customers, particularly in the customer attention landgrab that we’re experiencing right now.

For Applied AI businesses, figuring out ways to develop data moats, drive technological differentiation, and stay on the right side of distribution advantages seems to be the early strategy for building strong businesses in this period of massive disruption. Going forward, I expect to see more and more businesses exploring creative ways to leverage the other types of moats (in particular, network effects, which I believe have yet to be really leveraged here) in order to compete with the incumbents of the world who are working to transition into the age of AI.

If you have any feedback or thoughts on items I might’ve missed in this post, I’d love to hear them, as always. Thanks for reading!